Jointly edited by Zong Ren and Huang Xin

Baidu has now open-sourced its deep learning platform, Paddle, which has drawn a lot of interest from developers in the field of artificial intelligence, including some who had previously been working with TensorFlow and Caffe. Because there are still not many open-source deep learning platforms, developers and curious onlookers alike want to know the same things: how good is this platform? What do people think of it? And how does it differ from TensorFlow and Caffe?

Paddle itself existed long before it was open-sourced. It dates back to 2013, when Baidu's deep learning laboratory found that, with the rapid growth of training data such as advertising, text, images, and voice, the traditional single-GPU training platform could no longer meet the demands of deep neural network training. For this reason, under the leadership of Xu Wei, the laboratory built Paddle (Parallel Asynchronous Distributed Deep Learning), a multi-machine parallel GPU training platform.

But the Paddle open-sourced today is certainly not the simple model of three years ago. Three years ago Paddle may have been a standalone deep learning platform that did not support accessing data from other platforms. Today, however, Paddle stresses everywhere that its defining feature is the coupling of Spark with PADDLE: it is a heterogeneous distributed deep learning system based on Spark. After "tight friction" with Baidu's related businesses, it has gone through two iterations, from the Spark on Paddle architecture version 1.0 to the Spark on PADDLE architecture version 2.0. Judging by how platforms usually come to be open-sourced, it had probably already proven handy inside Baidu; after fixing a series of bugs, the lab finally decided to open-source Spark on PADDLE and the heterogeneous computing platform. As for why Baidu wants to open-source it, the reason is one we all understand.

Deep learning platforms currently still have many bugs, and attracting more developers to try and use the technology will certainly help raise the quality of Paddle.

▎ Outsiders' comments on this platform

The answer given by Jia Yangqing is so far a relatively positive assessment.

1. Very high quality GPU code

2. Very good RNN design

3. The design is very clean, without too much abstraction; this is much better than TensorFlow.

4. The high-speed RDMA part does not seem to be open-sourced (possibly because RDMA places certain requirements on cluster design): Paddle/RDMANetwork.h at master · baidu/Paddle · GitHub

5. The design ideas are closer to a first-generation DL framework, but considering that Paddle has been around for a long time, there are historical reasons for this design.

5.1 The config is a hard-coded protobuf message, which may affect scalability.

5.2 You can see many interesting, similar pieces of legacy design: a STREAM_DEFAULT macro is used and then redirected to a non-default stream through TLS: Paddle/hl_base.h at 4fe7d833cf0dd952bfa8af8d5d7772bbcd552c58 · baidu/Paddle · GitHub (so Paddle does not support Mac out of the box?)

5.3 Gradient computation uses the traditional coarse-grained forward/backward design (similar to Caffe). Some people may say "so Paddle doesn't have automatic gradient generation". This is wrong: whether autograd exists has nothing to do with the granularity of the ops. In fact, TensorFlow is gradually turning back to coarse-grained operators after realizing how slow fine-grained operators are. That is all I have seen so far. In short, it is a very solid framework, and Baidu's development skills are still good.
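Point 5.3 can be illustrated with a minimal Python sketch. It is not Paddle's or Caffe's actual code: the class names `Scale`, `ReLU`, and `Network` and the helper `loss_and_grad` are hypothetical, chosen only for illustration. Each layer is a coarse-grained unit with a hand-written `forward` and `backward`, yet the gradient of the whole network is still obtained automatically by chaining the `backward` calls in reverse order.

```python
# Illustrative sketch only: hypothetical classes, not the API of Paddle or Caffe.

class Scale:
    """Coarse-grained layer computing y = w * x with one scalar weight."""

    def __init__(self, w):
        self.w = w
        self.grad_w = 0.0

    def forward(self, x):
        self.x = x  # cache the input for the backward pass
        return [self.w * v for v in x]

    def backward(self, grad_out):
        # d(loss)/dw = sum_i grad_out[i] * x[i]
        self.grad_w = sum(g * v for g, v in zip(grad_out, self.x))
        # d(loss)/dx[i] = w * grad_out[i]
        return [self.w * g for g in grad_out]


class ReLU:
    """Coarse-grained layer computing y = max(x, 0)."""

    def forward(self, x):
        self.mask = [v > 0 for v in x]
        return [v if m else 0.0 for v, m in zip(x, self.mask)]

    def backward(self, grad_out):
        return [g if m else 0.0 for g, m in zip(grad_out, self.mask)]


class Network:
    """Chains layers; the full gradient comes from the per-layer backward calls."""

    def __init__(self, layers):
        self.layers = layers

    def forward(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def backward(self, grad_out):
        for layer in reversed(self.layers):
            grad_out = layer.backward(grad_out)
        return grad_out


def loss_and_grad(y):
    """Loss 0.5 * sum(y^2) and its gradient with respect to y."""
    return 0.5 * sum(v * v for v in y), list(y)


scale = Scale(2.0)
net = Network([scale, ReLU()])
y = net.forward([1.0, -3.0, 0.5])
loss, grad_y = loss_and_grad(y)
grad_x = net.backward(grad_y)
print(y, loss, scale.grad_w, grad_x)
```

Nobody wrote a derivative for the network as a whole here; only each layer knows its own local gradient. That is the sense in which a framework built from coarse-grained layers can still generate gradients automatically.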

Many readers have probably already seen Jia Yangqing's assessment. Here is another evaluation, from Funing, a former Baidu data engineer and now CTO of Polar Perspective:

At first glance, the design philosophy looks a lot like Caffe's, but network models are not as easy to define as they are in Caffe. Its biggest contribution is distributed training, which speeds up model building. More detailed impressions will have to wait until I have read the code and used it.

Another scholar who studies deep learning holds a view that contrasts with the two above:

TensorFlow's architecture can be thought of as an upgraded version of Theano. Theano predates Caffe by several years; Caffe simply was the first to train and release some successful convolutional neural network models, and so received more attention. TensorFlow has nothing to do with Caffe. It may borrow some of Caffe's implementation techniques, but in essence the two are unrelated. Baidu's framework was most likely built after Caffe's success and imitates Caffe to a large extent, while trying to change some things so that it looks different from Caffe.

I estimate that people who use Caffe will not switch to it, and neither will people who use other tools (TensorFlow, Keras, Theano, Torch, MXNet). Everyone will talk about it for a few days, and then... Look at its GitHub a month from now. The attention it gets, and the amount of code people publish with it on GitHub, will tell you whether it can make any waves (later you can also see whether anyone uses it in Kaggle or other competitions, or publishes research code with it). By now almost every major company, such as Microsoft, Amazon, and Yahoo, has released its own deep learning (or machine learning) framework, and none of them seems to have caused much of a stir.

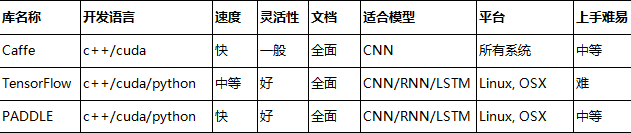

Lei Feng network applied today for the Paddle beta, and the application is still under review. Although we cannot yet download and try it directly, a comparison with the other two platforms is still possible, based on our previous understanding of Caffe and TensorFlow and on the material Paddle released today.

Interface languages

Caffe - cmd, MATLAB, Python

TensorFlow - Python, C++

Paddle - Python, C++

(Note: Python is the main language developers use; only Caffe requires C++, when modifying the internals of a model. If you disagree, developers are welcome to discuss this further.)

In general

1) Caffe is arguably the first industrial-grade deep learning tool. It began at the end of 2013 as a tool with an excellent CNN implementation, developed by UC Berkeley's Jia Yangqing. In the field of computer vision, Caffe is still the most popular toolkit.

Caffe is developed in C++ and CUDA and is fast, but because of some legacy architectural issues it is not flexible enough, and its support for recurrent networks and language modeling is poor. Caffe supports all major platforms, and it is moderately difficult to learn.

2) TensorFlow is Google's open-source second-generation deep learning technology. It offers an ideal RNN API and implementation, and it expresses vector operations as a symbolic graph, which can make development very fast.

TensorFlow runs well only on the various Linux systems and OS X, but its language support is relatively comprehensive, including Python, C++, and CUDA. Its developer documentation is not as thorough as Caffe's, so it is harder to get started with.

3) Baidu's Paddle, a heterogeneous distributed deep learning system based on Spark, uses GPU and FPGA heterogeneous computing to increase the data processing capability of each machine. For now the industry describes it as having a "simple, clean, and stable design, faster speed, and a smaller memory footprint", an assessment that owes much to this use of heterogeneous computing. As for its actual performance, we will have to wait a few days and see what users report.
