This article introduces the method and points of attention to using the data analysis tool pandas to read csv files to facilitate the rapid transition to the data processing stage.

pandas is an efficient data analysis tool. Based on its highly abstract data structure DataFrame, almost any operation you want can be performed on the data.

Since there are many formats of data sources in the real world, pandas also supports importing methods of different data formats. This article introduces how pandas imports data from csv files.

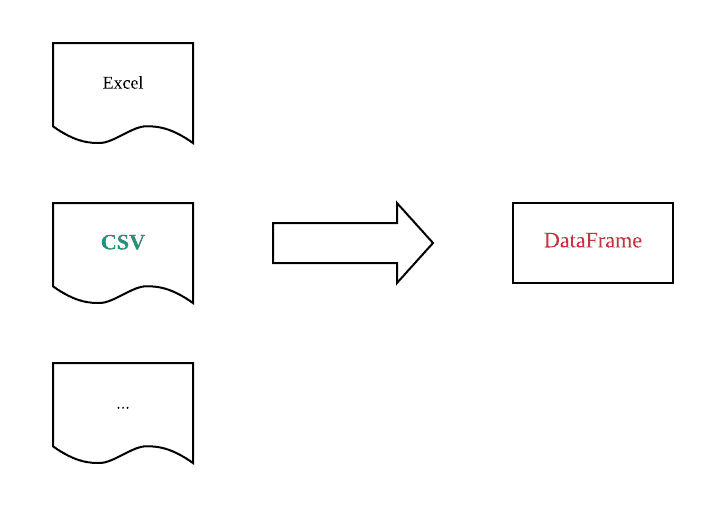

As can be seen from the above figure, what we have to do is to read in and convert the data stored in csv format into DataFrame format. pandas provides a very simple api function to achieve this function: read_csv().

1. Read the data in the csv file through the read_csv interface

Here is a simple example:

import pandas as pd

CSV_FILE_PATH ='./test.csv'

df = pd.read_csv(CSV_FILE_PATH)

print(df.head(5))

As long as you simply specify the path of the csv file, you can get the data df in DataFrame format. For data under ideal conditions, the import process is that simple!

Consider this situation below: Assuming that there are several invalid lines in the header of the csv file, the printed result may be as follows:

1 2 3 4

0 datetime host hit volume

12018-07-2409:00:00 weibo.com 20 1020

22018-07-2509:00:00 qq.com no20 1028

32018-07-2619:00:00 sina.com 25 1181

42018-07-2721:00:00 sohu.com 15 4582

Pandas regards the invalid data of [1,2,3,4] as the column name; in fact, we prefer to regard the data of [datetime,host,hit,volume] as the column name. In this case, the read_csv() function provides a parameter: skiprows, which is used to specify to skip the first few lines of the header of the csv file. Here, we can just skip 1 line.

import pandas as pd

CSV_FILE_PATH ='./test.csv'

df = pd.read_csv(CSV_FILE_PATH, skiprows=1)

print(df.head(5))

The results obtained are as follows:

datetime host hit volume

02018-07-2409:00:00 weibo.com 20 1020

12018-07-2509:00:00 qq.com no20 1028

22018-07-2619:00:00 sina.com 25 1181

32018-07-2721:00:00 sohu.com 15 4582

2. Deal with invalid data in csv file

Pandas can automatically infer the data type of each column to facilitate subsequent data processing. Take the data in the above text as an example, and pass the following code:

import pandas as pd

CSV_FILE_PATH ='./test.csv'

df = pd.read_csv(CSV_FILE_PATH)

print(df.head(5))

print('datatype of column hit is:'+ str(df['hit'].dtypes))

The result:

datetime host hit volume

02018-07-2409:00:00 weibo.com 20 1020

12018-07-2509:00:00 qq.com 20 1028

22018-07-2619:00:00 sina.com 25 1181

32018-07-2721:00:00 sohu.com 15 4582

datatype of column hit is: int64

Pandas judges the data type of the hit column to be int64, which is obviously convenient for our future operations on the data in this column. But in actual situations, we often face the problem of missing data. If this happens, we often use some placeholders to express it. Suppose, we use the missing placeholder to indicate missing data, and still use the above code to explore what will happen:

datetime host hit volume

02018-07-2409:00:00 weibo.com 20 1020

12018-07-2509:00:00 qq.com 20 1028

22018-07-2619:00:00 sina.com missing missing

32018-07-2721:00:00 sohu.com 15 4582

datatype of column hit is:object

Since the missing string appears in the hit column, pandas judges the data type of the hit column to be object. This will affect our calculations on the column of data. For example, suppose we want to calculate the sum of the first two rows of data in the hit column, the code is as follows:

print(df['hit'][0]+ df['hit'][1])

The result is:

2020

Originally, what we wanted was the result of a mathematical operation, but what we got was a string concatenation result. This is due to the serious impact of data type judgment errors. In this case, the read_csv() function also provides a simple processing method. You only need to specify placeholders through the na_value parameter, and pandas will automatically convert these placeholders into NaN during the process of reading in data, so as not to Affect pandas's correct judgment of the column data type. Sample code:

import pandas as pd

CSV_FILE_PATH ='./test.csv'

df = pd.read_csv(CSV_FILE_PATH, skiprows=0, na_values=['missing')

print(df.head(5))

print('datatype of column hit is:'+ str(df['hit'].dtypes))

print(df['hit'][0]+ df['hit'][1])

The results are as follows:

datetime host hit volume

02018-07-2409:00:00 weibo.com 20.01020.0

12018-07-2509:00:00 qq.com 20.01028.0

22018-07-2619:00:00 sina.com NaN NaN

32018-07-2721:00:00 sohu.com 15.04582.0

datatype of column hit is: float64

40.0

As you can see, pandas converted all the missing units in the data set to NaN and successfully determined the data type of the hit column.

3. Summary

Through a simple read_csv() function, you can actually do the following things:

Read the csv file locally through the specified file path, and convert the data into DataFrame format

Correct the head of the data set (column)

Correctly handle missing data

Infer the data type of each column

Of course, the read_csv() function has a series of other parameters to deal with various situations. Students who encounter specific problems can refer to its interface guide.

64V Battery Pack ,Lithium Battery Box,Lithium Power Pack,Jackery Battery Pack

Zhejiang Casnovo Materials Co., Ltd. , https://www.casnovo-new-energy.com